Introducing Edgee AI Gateway: one API for LLMs, with routing, observability, and privacy controls

Dear friends,

Over the past few months, we’ve seen the same pattern repeat among CTOs and platform teams building AI features in production:

- The "LLM layer" is becoming a product of its own: routing, retries, evals, budgets, logs, redaction, governance.

- Provider sprawl is real: OpenAI today, Anthropic tomorrow, open models next week, and a long tail after that.

- Reliability and cost are moving targets: outages happen, pricing changes, latency varies by region and vendor.

And yet, most teams still integrate LLMs the old way, with direct calls scattered across services, ad‑hoc fallbacks, and partial visibility.

That’s why we built Edgee AI Gateway.

What is an AI Gateway?

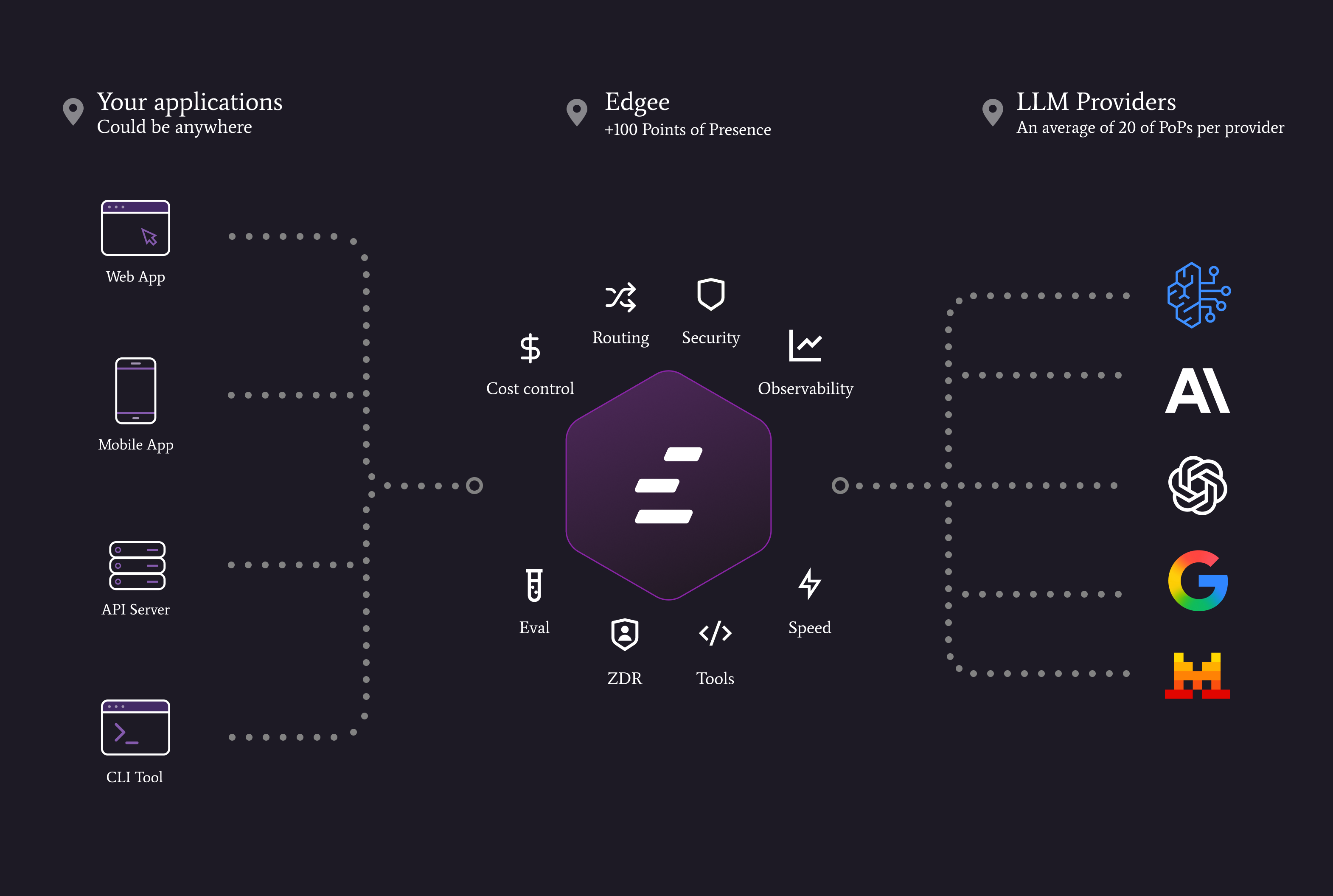

An AI Gateway is the layer that sits between your applications and LLM providers.

It gives you one place to implement:

- Routing policies (cost, latency, quality, region, allowlists)

- Fallbacks and retries (without duplicating logic across services)

- End-to-end observability (latency, tokens, errors, cost)

- Controls (budgets, rate limits, key management)

- Privacy controls (configurable logging and retention)

In short: it’s how you turn "LLM calls" into a production-grade system.

Introducing Edgee AI Gateway

Edgee AI Gateway is a unified API for LLM providers with built‑in routing, observability, and privacy controls, designed for teams running AI in production.

Here’s what you can expect.

Instead of asking "which LLM do we use?", we want you to ask "what policies do we want?" and implement them once.

With Edgee AI Gateway, your app speaks a single OpenAI‑compatible API. That matters for a very pragmatic reason: it keeps your integration stable while your provider choices evolve. It also removes many of the small operational paper cuts: vendor-specific edge cases, different error formats, slightly different streaming behaviors, and so on.
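To make "one stable integration" concrete, here is a minimal sketch of an OpenAI-style chat completions request built with Python's standard library. It assumes the gateway accepts the usual OpenAI request shape (`model`, `messages`, bearer token) at a `/chat/completions` path; the base URL, the API key, and the `provider/model` naming are hypothetical placeholders, not Edgee's documented values. The request is only built, never sent.

```python
import json
import urllib.request

# Hypothetical gateway base URL: substitute the endpoint from your own setup.
GATEWAY_BASE_URL = "https://gateway.example.com/v1"


def build_chat_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{GATEWAY_BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Switching providers only changes the model string; the calling code stays the same.
# The "provider/model" identifiers below are hypothetical.
for model in ("provider-a/model-small", "provider-b/model-small"):
    req = build_chat_request(model, "Summarize this support ticket.", api_key="sk-test")
    print(req.get_method(), req.full_url, json.loads(req.data)["model"])
```

The design point is that everything provider-specific lives in one string, so swapping vendors is a configuration change rather than a code change.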

Then comes the part that usually turns into a mess in large codebases: routing. In production, the "best model" is not a fixed answer. It depends on the endpoint, the user’s geography, your latency SLOs, your cost constraints, and sometimes even your compliance posture. So we built Edgee AI Gateway to let you express those constraints as routing policies instead of hard-coding "if provider A fails then try provider B" in ten different services.
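As an illustration of "policies instead of hard-coded if/else", the sketch below treats a routing policy as data: allowed regions, a latency ceiling, and a preference for cost or latency. It returns every eligible route, best first, so the tail of the list can double as an ordered fallback chain. The `Route` and `Policy` types, their fields, and the numbers are hypothetical and do not describe Edgee's actual policy format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    provider: str
    model: str
    region: str
    p95_latency_ms: int        # observed latency, e.g. taken from gateway metrics
    usd_per_1k_tokens: float


@dataclass(frozen=True)
class Policy:
    allowed_regions: frozenset[str]
    max_p95_latency_ms: int
    prefer: str = "cost"       # "cost" or "latency"


def select_routes(routes: list[Route], policy: Policy) -> list[Route]:
    """Filter routes by the policy's constraints, then rank them best first."""
    eligible = [
        r for r in routes
        if r.region in policy.allowed_regions
        and r.p95_latency_ms <= policy.max_p95_latency_ms
    ]
    if policy.prefer == "latency":
        return sorted(eligible, key=lambda r: (r.p95_latency_ms, r.usd_per_1k_tokens))
    return sorted(eligible, key=lambda r: (r.usd_per_1k_tokens, r.p95_latency_ms))


# Hypothetical routes and an EU-only, cost-first policy.
ROUTES = [
    Route("provider-a", "model-large", "eu", 900, 0.010),
    Route("provider-b", "model-medium", "eu", 600, 0.004),
    Route("provider-c", "model-medium", "us", 400, 0.003),
]
eu_policy = Policy(allowed_regions=frozenset({"eu"}), max_p95_latency_ms=1000)
print([r.provider for r in select_routes(ROUTES, eu_policy)])
# → ['provider-b', 'provider-a']
```

Because the constraints are data, the same function serves every endpoint; only the `Policy` value differs per endpoint or per user region.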

And because the real world is never perfect, the gateway needs to be reliable by default. Providers will have incidents. Timeouts happen. Rate limits happen. Edgee AI Gateway is designed to give you sane, consistent failure modes, with fallbacks and retries that don’t leak complexity into your application logic. The goal is simple: your product keeps working even when a provider has a bad day.
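The retry-then-fallback behavior described above can be sketched as follows: each provider gets a bounded number of attempts with jittered exponential backoff, then the next provider is tried, and callers see a single error type if everything fails. The `ProviderError` classification and the `send` callback are assumptions for illustration; a real gateway would decide retryability from status codes, timeouts, and rate-limit signals.

```python
import random
import time
from collections.abc import Callable, Sequence


class ProviderError(Exception):
    """Hypothetical retryable failure: timeout, rate limit, or 5xx."""


def call_with_fallback(
    providers: Sequence[str],
    send: Callable[[str], str],
    max_attempts_per_provider: int = 2,
    base_delay_s: float = 0.2,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Try providers in order, retrying retryable errors with backoff before falling back."""
    errors: list[str] = []
    for provider in providers:
        for attempt in range(max_attempts_per_provider):
            try:
                return send(provider)
            except ProviderError as exc:
                errors.append(f"{provider} attempt {attempt + 1}: {exc}")
                if attempt + 1 < max_attempts_per_provider:
                    # "Full jitter": wait a random time up to base * 2^attempt.
                    sleep(random.uniform(0, base_delay_s * 2 ** attempt))
    # One consistent failure mode for callers, whichever providers failed.
    raise RuntimeError("all providers failed: " + "; ".join(errors))


def fake_send(provider: str) -> str:
    """Stand-in transport: provider-a is having a bad day."""
    if provider == "provider-a":
        raise ProviderError("503 Service Unavailable")
    return f"ok from {provider}"


print(call_with_fallback(["provider-a", "provider-b"], fake_send, sleep=lambda s: None))
# → ok from provider-b
```

Non-retryable exceptions (anything other than `ProviderError`) propagate immediately, which keeps, for example, a malformed request from being replayed against every provider.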

Of course, none of this matters if you can’t see what’s going on. We’re building the gateway with a very opinionated view of observability: you should be able to answer, quickly and confidently, questions like "what’s our latency by provider?", "where are errors coming from?", "what is this feature costing us?", and "which route is drifting in cost or quality?". That’s why the gateway captures the signals you actually need (latency, errors, usage, cost) and makes them exportable.
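To show how per-call signals answer the questions above, here is a small aggregation over hypothetical call records: median latency and error rate by provider, and cost by feature, exported as JSON. The `CallRecord` fields (including the `feature` tag) are assumptions about what a gateway could capture, not Edgee's actual log schema; production dashboards would typically add percentiles such as p95.

```python
import json
from collections import defaultdict
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class CallRecord:
    provider: str
    feature: str       # hypothetical tag attached by the calling app
    latency_ms: float
    ok: bool
    cost_usd: float


def summarize(records: list[CallRecord]) -> dict[str, dict[str, float]]:
    """Aggregate latency and errors per provider, and cost per feature."""
    latencies: dict[str, list[float]] = defaultdict(list)
    calls: dict[str, int] = defaultdict(int)
    errors: dict[str, int] = defaultdict(int)
    cost_by_feature: dict[str, float] = defaultdict(float)
    for r in records:
        latencies[r.provider].append(r.latency_ms)
        calls[r.provider] += 1
        if not r.ok:
            errors[r.provider] += 1
        cost_by_feature[r.feature] += r.cost_usd
    return {
        "median_latency_ms": {p: median(v) for p, v in latencies.items()},
        "error_rate": {p: errors[p] / calls[p] for p in calls},
        "cost_usd_by_feature": dict(cost_by_feature),
    }


records = [
    CallRecord("provider-a", "search", 800.0, True, 0.25),
    CallRecord("provider-a", "search", 1200.0, False, 0.0),
    CallRecord("provider-b", "chat", 500.0, True, 0.5),
]
# JSON keeps the summary easy to ship to whatever metrics backend you already use.
print(json.dumps(summarize(records), indent=2))
```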

Privacy is the other non-negotiable. Different teams have different requirements, and even within a single company the right posture varies by workload. So we focus on configurable privacy controls: you decide what is logged, how long it is retained, and how prompts/outputs should be handled in your observability pipeline.
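A per-workload privacy setting might look like the sketch below: a logging mode (`none`, `redacted`, or `full`) plus a retention window, applied before a prompt ever reaches the observability pipeline. The `PrivacyPolicy` type, its mode names, and the two regex redaction rules are hypothetical and deliberately simple; they are not Edgee's configuration and are far from a complete PII detector.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Illustrative redaction rules only; real PII detection needs much more than this.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
LONG_DIGITS = re.compile(r"\b\d{9,}\b")  # e.g. card or account numbers

MODES = {"none", "redacted", "full"}


@dataclass(frozen=True)
class PrivacyPolicy:
    prompt_logging: str = "redacted"  # one of MODES
    retention_days: int = 30

    def __post_init__(self) -> None:
        if self.prompt_logging not in MODES:
            raise ValueError(f"unknown prompt_logging mode: {self.prompt_logging!r}")


def loggable_prompt(prompt: str, policy: PrivacyPolicy) -> Optional[str]:
    """Return what may be logged for this prompt under the policy (None = log nothing)."""
    if policy.prompt_logging == "none":
        return None
    if policy.prompt_logging == "full":
        return prompt
    return LONG_DIGITS.sub("[NUMBER]", EMAIL.sub("[EMAIL]", prompt))


def is_expired(logged_at: datetime, policy: PrivacyPolicy, now: datetime) -> bool:
    """True once a log entry is older than the policy's retention window."""
    return now - logged_at > timedelta(days=policy.retention_days)


support_policy = PrivacyPolicy()  # redacted logging, 30-day retention
print(loggable_prompt("Contact jane.doe@example.com about 4111111111111111", support_policy))
# → Contact [EMAIL] about [NUMBER]
```

Keeping the policy as a value per workload lets, say, an internal tool log full prompts while a customer-facing feature logs nothing, without either service changing its code.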

Finally, we know some organizations want the benefits of a gateway while keeping direct billing relationships and provider-specific account controls. That’s why we support BYOK (Bring Your Own Keys): keep using your provider keys, and still use Edgee for routing, observability, budgets, and controls, without rewriting your integration.

Get started

If you’re building AI features in production and you want a simpler way to manage providers, reliability, cost, and privacy:

More soon; we’ll share concrete examples of routing policies, incident playbooks, and the observability dashboards we wish every team had from day one.